BASE Mental Effort Monitoring Dataset

Description

This study was designed to investigate how the mental effort of reading and understanding programs of different complexity can be measured by a set of sensors placed on the programmers (subjects) who participated in the experiment. We used the experimental setup of one of the experiment campaigns of the BASE initiative, which covers a comprehensive set of sensors including EEG with a 64-channel cap, ECG, EDA, and eye tracking with pupillography. The signals from all these sources are synchronized to a common time base to allow consistent cross-analysis.

The study included 30 participants, all of whom had experience in Java, the programming language selected for this study. The screening process included an interview to assign each participant a Java programming skill level: Intermediate, Advanced, or Expert.

The study was approved by the Ethics Committee of our organization, in accordance with the Declaration of Helsinki, and all experiments were performed in accordance with relevant guidelines and regulations. Informed consent was signed by all participants. The anonymized data from this research will be available upon request to the authors.

Three small Java programs of different complexity were carefully designed to keep the programming style consistent and to prevent math or algorithmic difficulty, which is not directly related to code complexity, from posing extra difficulties to the participants:

– Program C1 counts the number of values existing in a given array that fall within a given interval using a straightforward loop.

– Program C2 multiplies two numbers using the basic algorithm where every digit from one number is multiplied by every digit from the other number, from right to left. The numbers are given as strings and the algorithm includes converting the strings to byte arrays.

– Program C3 searches for occurrences of a smaller integer cubic array inside a larger cubic array, looking for the largest occurrence of the smaller array within the larger one. The algorithm has a high cyclomatic complexity, with many nested loops.
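To give a feel for the kind of code described above, here is a minimal Java sketch of C1-style and C2-style routines. These are not the programs shown to the participants: the class name, method names, inclusive interval bounds, and the restriction to non-negative decimal strings are all assumptions made for illustration.

```java
// Illustrative sketch only; NOT the actual C1/C2 programs used in the study.
final class ProgramSketches {

    // C1-style: count the values that fall within [low, high].
    // Inclusive bounds are an assumption; the description does not specify them.
    static int countInRange(int[] values, int low, int high) {
        int count = 0;
        for (int v : values) {
            if (v >= low && v <= high) {
                count++;
            }
        }
        return count;
    }

    // C2-style helper: convert a string of decimal digits to a byte array.
    // Assumes the input contains only the characters '0'..'9'.
    static byte[] toDigits(String number) {
        byte[] digits = new byte[number.length()];
        for (int i = 0; i < number.length(); i++) {
            digits[i] = (byte) (number.charAt(i) - '0');
        }
        return digits;
    }

    // C2-style: schoolbook multiplication, every digit of one number times
    // every digit of the other, processed from right to left.
    static String multiply(String a, String b) {
        byte[] x = toDigits(a);
        byte[] y = toDigits(b);
        int[] result = new int[x.length + y.length];
        for (int i = x.length - 1; i >= 0; i--) {
            for (int j = y.length - 1; j >= 0; j--) {
                int sum = x[i] * y[j] + result[i + j + 1];
                result[i + j + 1] = sum % 10; // digit kept at this position
                result[i + j] += sum / 10;    // carry moved one position left
            }
        }
        StringBuilder sb = new StringBuilder();
        for (int d : result) {
            if (sb.length() == 0 && d == 0) {
                continue; // skip leading zeros
            }
            sb.append(d);
        }
        return sb.length() == 0 ? "0" : sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(countInRange(new int[] {1, 5, 10, 15}, 5, 10));
        System.out.println(multiply("123", "456"));
    }
}
```

Like the description of C2, the multiplication is split across two methods, which keeps each one short and shallowly nested.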

The table below summarizes the complexity metrics of the three programs. In C1 and C3 the algorithm is coded in one function, while in C2 it is spread across two functions, which possibly makes C2 easier to read than C3 even though both have a comparable number of lines of code.

| Prog. | Lines of code | Nested block depth | No. of params. | Cyclomatic complexity |
| --- | --- | --- | --- | --- |
| C1 | 13 | 2 | 3 | 3 |
| C2 | 42 (12+30) | 3 | 3 | 4 |
| C3 | 49 | 5 | 4 | 15 |

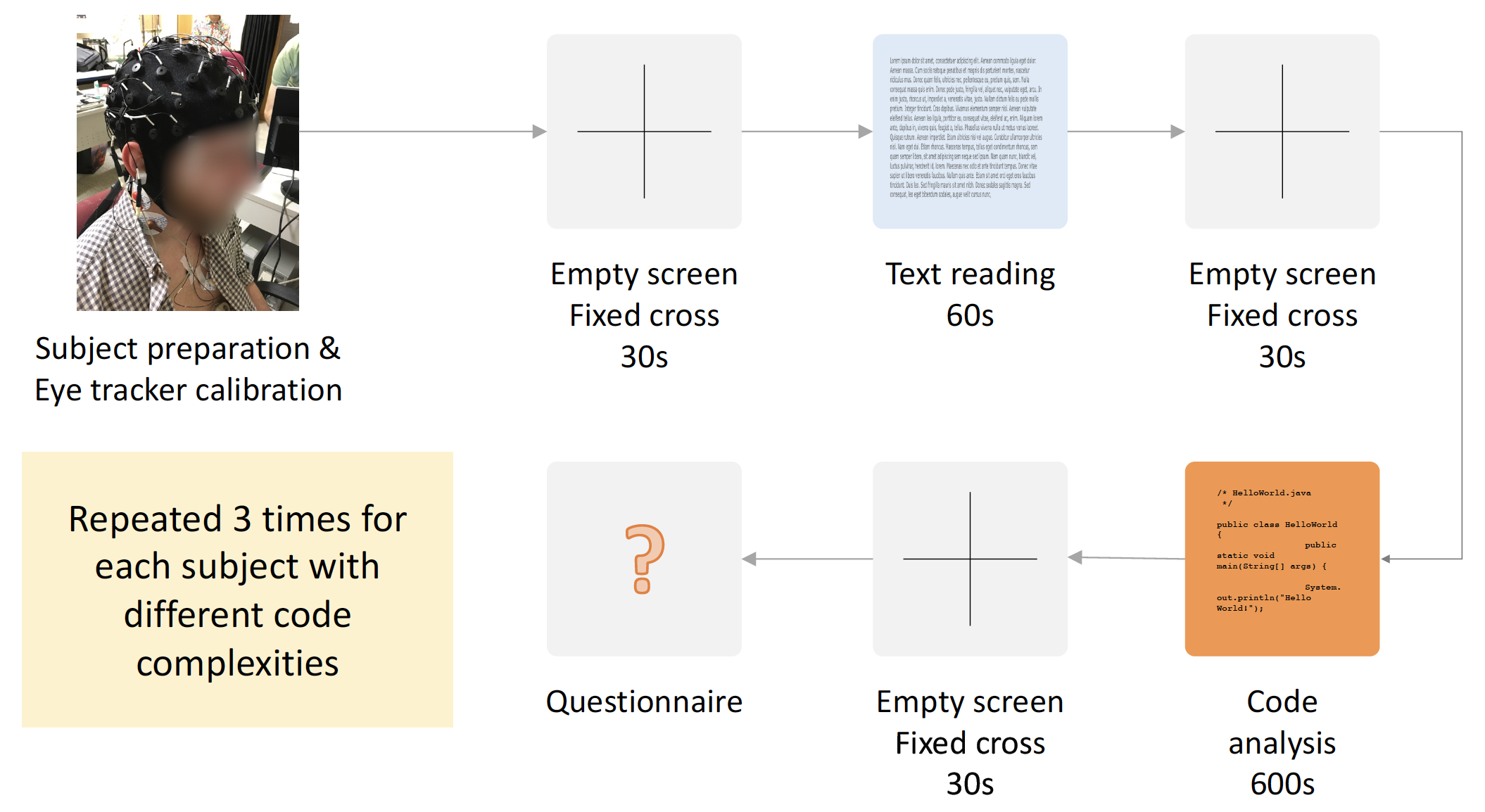

All the participants performed the experiments in the same room, without distractions, noise or presence of people unrelated to the experiments. The protocol includes the following steps, performed on the screen of a laptop:

- Empty grey screen with a black cross in its center for 30 seconds. It serves as a baseline phase in which the participant does not perform any activity.

- Screen with a text in natural language to be read by the participant (60 seconds max.). This step serves as a reference activity (different from code understanding) for the purpose of data analysis.

- Empty grey screen with a black cross for 30 seconds.

- Screen displaying the Java code of the program to be analyzed for code comprehension (C1, C2, or C3). This step lasts 10 minutes maximum.
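The steps above can be written down as a small table of phases and time limits, which makes the upper bound on one pass through the protocol easy to check. The following is a minimal Java sketch; the class name and step labels are hypothetical and do not correspond to identifiers in the dataset files.

```java
// Illustrative sketch of the protocol timing; labels are assumptions.
final class ProtocolSketch {

    // One entry per protocol step, in presentation order.
    static final String[] STEPS = {
        "Baseline (grey screen with cross)",
        "Natural-language text reading",
        "Baseline (grey screen with cross)",
        "Java code comprehension"
    };

    // Time limit of each step in seconds: 30 s, 60 s max., 30 s, 10 min max.
    static final int[] MAX_SECONDS = {30, 60, 30, 600};

    // Upper bound on the duration of one pass through the four steps.
    static int maxTotalSeconds() {
        int total = 0;
        for (int s : MAX_SECONDS) {
            total += s;
        }
        return total;
    }

    public static void main(String[] args) {
        for (int i = 0; i < STEPS.length; i++) {
            System.out.printf("%d. %s: up to %d s%n", i + 1, STEPS[i], MAX_SECONDS[i]);
        }
        System.out.println("Upper bound for one pass: " + maxTotalSeconds() + " s");
    }
}
```

With these limits, one pass through the four steps takes at most 720 seconds (12 minutes); the reading and code comprehension steps may end earlier.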

The protocol scheme is presented below.

Parameters

Time series

- EDA – Electrodermal Activity

- ECG – Electrocardiogram

- PPG – Photoplethysmogram

- ICG – Impedance Cardiogram

- EEG – Electroencephalogram (64 channels)

Eye tracking data

- Raw data position

- Pupil diameter (left and right eye)

- Cornea reflex position (left and right eye)

- Point of regard (gaze data / left and right eye)

- Head position / Rotation

Meta data

- Screening evaluation data

- NASA-TLX evaluation data

Annotations

- Expert code annotations

Aux data

- Images presented to the volunteers

Access:

This database is available to logged-in users using the link below.

How to cite this database:

Hijazi, H., Duraes, J., Couceiro, R., Castelhano, J., Barbosa, R., Medeiros, J., … & Madeira, H. (2022). Quality evaluation of modern code reviews through intelligent biometric program comprehension. IEEE Transactions on Software Engineering, 49(2), 626-645.

Hijazi, H., Cruz, J., Castelhano, J., Couceiro, R., Castelo-Branco, M., de Carvalho, P., & Madeira, H. (2021). iReview: An intelligent code review evaluation tool using biofeedback. In 2021 IEEE 32nd International Symposium on Software Reliability Engineering (ISSRE) (pp. 476-485). IEEE.

Couceiro, R., Barbosa, R., Duraes, J., Duarte, G., Castelhano, J., Duarte, C., Teixeira, C., Laranjeiro, N., Medeiros, J., Castelo-Branco, M., Carvalho, P., & Madeira, H. (2019). Spotting problematic code lines using nonintrusive programmers’ biofeedback. In 30th International Symposium on Software Reliability Engineering (ISSRE 2019).